Scientists from the University of California at Berkeley are using the 500-meter Aperture Spherical Telescope in China to check out a final batch of 100 candidate “ET” radio signals detected through the “SETI@home” program. File Photo by STR/EPA

ST. PAUL, Minn., Jan. 30 (UPI) -- One of the longest-running searches for extraterrestrial life is coming to an end this year as U.S. scientists wrap up a popular program that enlisted millions of home computer users to analyze radio signals received from space.

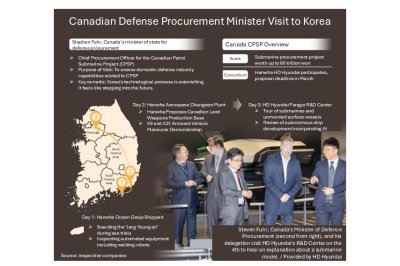

After years of poring over immense amounts of generated data, the program’s co-founders at the University of California at Berkeley told UPI this week they are probing 100 detected signals deemed to be the best candidates for messages from “ET” before the effort is wrapped up for good, 27 years after it was launched.

But even though the “SETI@home” project has so far failed to record a “first contact” from an alien civilization, its leaders say valuable lessons have been learned that can be applied to the continuing hunt for life beyond Earth.

SETI@home, short for Search for Extraterrestrial Intelligence, was launched in 1999 by scientists at UC Berkeley who over the course of two-plus decades enlisted more than 5 million “crowdsourced” volunteers willing to donate their home computers’ processing capacity to analyze data generated by momentary energy blips picked up by the Arecibo Observatory in Puerto Rico.

It was one of the pioneering efforts at distributed computing in an era before supercomputers and high-speed Internet connections. Under the project, home users downloaded and installed free software that could pick out signals deemed to be “ET” candidates from raw data supplied by the 1,000-foot radio telescope at Arecibo, which collapsed in 2020.

The observatory was damaged by Hurricane Maria in 2017 and rebuilt, but it met its end a little more than three years later because filled spelter sockets that anchored the massive support cables had been undergoing long-term chemical and mechanical degradation.

The data was collected over a period of 14 years and covered almost the entire sky visible to the telescope as its operators performed other tasks, such as mapping solar system bodies and discovering pulsars.

From its data, the home computer users ultimately produced 12 billion detections. The vast majority turned out to be radio frequency interference from man-made sources, such as satellites and earthbound radio and television broadcasts, but researchers for years continued to doggedly plow through the possibilities.

Billions of “candidate” radio signals narrowed to final 100

Project co-founder David Anderson of UC Berkeley’s Space Sciences Laboratory said he and his team spent a decade narrowing down that massive list to 1 million candidates and then to a final 100, which are now being investigated using China’s 500-meter Aperture Spherical Telescope, also known as FAST, in hopes of finding them again.

And after that’s completed, the long-running program will officially be a wrap, in part because it has now reached a point of diminishing scientific returns.

“The output of the first two phases of SETI@home were millions of what we call signal candidates, which are basically collections of momentary bursts of energy from the same place in the sky at about the same frequency, but possibly spread over many years,” Anderson told UPI.

“And of course, there was a lot of work involved in removing the man-made interference from these things and ranking them, because at some point we had to go through them and manually inspect the signal candidates to get rid of the ones that are obviously interference.

“A lot of that we could do by using computer algorithms we developed, but in the end, we had to look at these signals ourselves.”

To guide the development of those algorithms, Anderson and his team used artificial candidates, or “birdies,” that modeled persistent ET signals within a range of power and bandwidth parameters. The birdies were introduced blindly, allowing the team to gauge how sensitive their detection system was.

They were able to generate the initial billions of candidate signals only because of the small processors provided by the home-based volunteers, whose response at the start of the effort in the late ’90s was overwhelming, Anderson said.

“Whether there is extraterrestrial life is kind of the most important unanswered scientific question at this point, and so I think we knew that we’d get some users,” he said. “We banked on, I think, 50,000 people initially, which we thought we’d need to keep up with the stream of data from Arecibo.

“We got a lot of national media coverage right at the beginning, and within the first year we had close to 1 million participants. We actually had to scramble to figure out ways to use that surplus of computing power effectively.”

UC Berkeley research astronomer Eric Korpela, another co-founder of the program, said he felt a keen “sense of accomplishment” with SETI@home, both in the sense of technical achievements — such as in vastly increasing the sensitivity of signal detection over existing spectroscopic methods — and in how it demonstrated the intensity of worldwide public interest in the search for ET.

“We encountered a lot of resistance from the SETI community when we first started this,” he told UPI. “Whenever you start a project with a large public-facing component, there’s always the fear in a lot of people’s minds that you are going to do something wrong and you’re going to turn people off the entire field.

“But, of course, I think that wasn’t the case. Instead, this really engaged the public imagination, and I don’t think that we’re necessarily done with that. Someone could again tap into that sense of fascination that people have about the search for extraterrestrial life.”

Many people still want to have a connection to this sort of science, Korpela said, adding, “I think that is really a large part of our legacy.”

Others praise, assess impact of SETI@home

Other researchers and organizations deeply involved in the search for extraterrestrial life also praised the accomplishments and legacy of SETI@home as it wraps up its mission.

Among them are the National Science Foundation National Radio Astronomy Observatory and Green Bank Observatory in West Virginia, trailblazers in radio astronomy and operators of Breakthrough Listen, described as the largest ever scientific research program aimed at finding evidence of civilizations beyond Earth.

Observatory public information officer Jill Malusky noted that her organization and UC Berkeley’s SETI Research Center worked together on SETI@home, and that its winding down won’t sever that relationship.

“The NSF NRAO/GBO are big supporters of citizen science projects, and we’re excited about the impact of SETI@home’s legacy through the tireless work of its volunteers, and for the public recognition SETI can bring to efforts like these,” she told UPI.

“The search for techno-signatures and extraterrestrial life is a very exciting part of the scientific research that the NSF NRAO’s telescopes can do — and it’s one of the accessible areas for the public to understand.”

Most staffers who work at the West Virginia observatories were drawn there “by the same curiosity we all have when we look up at the universe — are we the only ones here? Is anyone else out there?” she said.

“While what we find with our telescopes may not be as dramatic as we hope, like a sci-fi movie, it’s still exciting to have our work overlap with the search.”

Similarly, prominent astrobiologist and SETI researcher Douglas Vakoch said SETI@home revolutionized the search for life in the universe by solving one of the greatest challenges of looking for intelligence in space, and that by doing so it “directly inspired a new generation of researchers who are attempting first contact by sending powerful radio messages to the stars.”

Vakoch is president of METI International, a nonprofit research and educational organization dedicated to messaging extraterrestrial intelligence, and editor of many academic works in several fields.

He told UPI that SETI@home was a breakthrough in that it was able to combine “mainstream astronomy” with the search for extraterrestrials, which researchers must “constantly struggle to justify” as they seek precious telescope time.

“With SETI@home, scientists did both,” Vakoch said. “As astronomers pointed the Arecibo radio telescope at targets of their choice, SETI@home also analyzed the incoming data, but this time for signals that can’t be created by nature. SETI@home was designed so scientists could conduct mainstream astronomy and simultaneously determine whether we’re alone in the universe.”

In that way, instead of becoming an obstacle to astronomers seeking time on the world’s largest radio telescope, SETI@home “helped foster public support and recognition for space science.”

Its greatest legacy, he said, is that it is now “guiding the next generation of interstellar communication,” including Vakoch’s own METI project, which rather than listening for radio signals from space as SETI does, reverses the process by sending powerful radio signals to nearby stars in the hope of eliciting a response from an advanced civilization.

Despite thus far coming away empty-handed in the search for ET, the SETI@home project nonetheless provided many valuable insights, Anderson said.

“It was a ‘whole sky’ project that covered everything visible from Arecibo, and there’s a lot of technical things that we did, some of which were right and others we would do differently if we had to go back,” he said.

“So we learned a lot of lessons about how to do radio astronomy, and we published two papers last year describing them.”

He added that the powerful distributed computing system established for SETI@home can be used in the future for research in related areas such as cosmology and pulsars, or even for medical research.
