With both major party presidential nominations sewn up, we’re deep into the season in which fretting over polls can become an obsession. That’s especially true this year, as former President Trump holds a small but persistent edge over President Biden in most national and swing-state surveys.

That’s led many Democrats to dig deep into the innards of polls in an often self-deluding search for error.

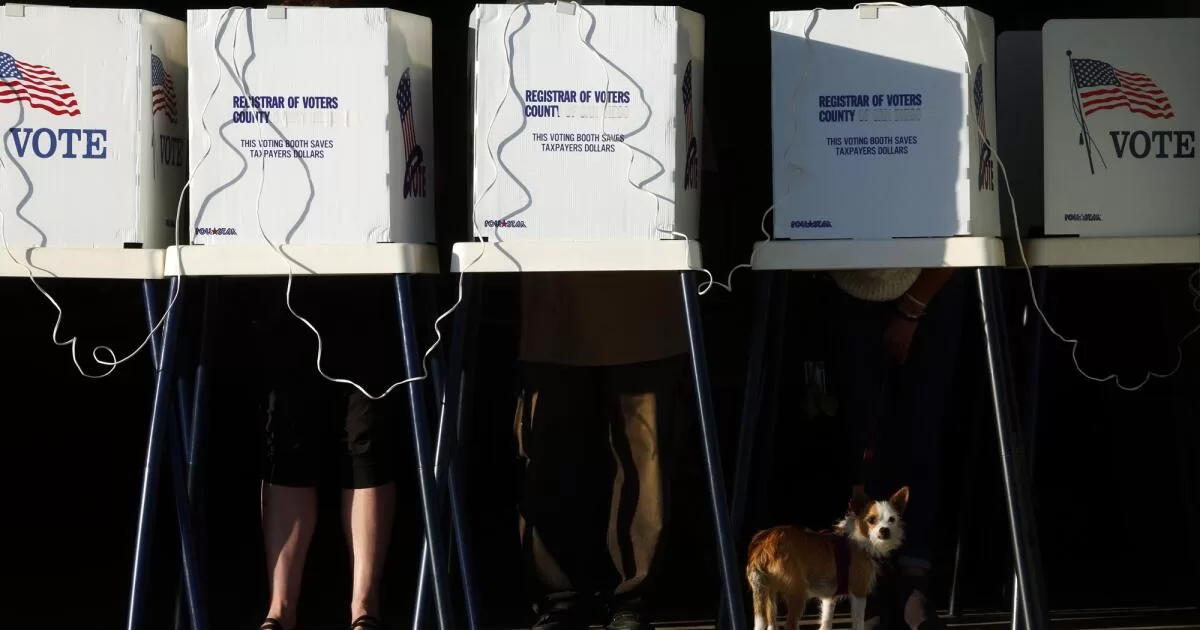

The fact is, polls continue to get election results right the vast majority of the time. They’re also an indispensable tool for democracy — informing residents of a vast and varied nation what their fellow Americans believe.

At the same time, errors do exist, often involving either problems collecting data or troubles interpreting it.

This week, let’s examine a couple of examples and take a look at how L.A. Times polls did this primary season.

A Holocaust myth?

In December, the Economist published a startling poll finding: “One in five young Americans thinks the Holocaust is a myth,” the headline said.

Fortunately for the country, although perhaps not for the publication, it’s the poll finding that may have been mythical.

In January, the nonpartisan Pew Research Center set out to see if it could replicate the finding. It couldn’t. Pew asked the same question the Economist poll asked and found that the share of Americans ages 18-29 who said the Holocaust was a myth was not 20%, but 3%.

What’s going on?

The problem isn’t a bad pollster: YouGov, which does the surveys for the Economist, is among the country’s most highly regarded polling organizations. But the methodology YouGov uses, known in the polling world as opt-in panels, can be victimized by bogus respondents. That may have been the case here.

Panel surveys are a way to solve a big problem pollsters face: Very few people these days will answer phone calls from unknown numbers, making traditional phone-based surveys extremely hard to carry out and very expensive.

Rather than randomly call phone numbers, polling organizations can solicit thousands of people who will agree to take surveys, usually in return for a small payment. For each survey, the pollsters select people from the panel to make up a sample that’s representative of the overall population.

Some people join simply for the money, however, then may speed through, answering questions more or less at random. Previous research by Pew has found that such bogus respondents most often claim to belong to groups that are hard to recruit, including young people and Latino voters.

Pollsters have found evidence of organized efforts to infiltrate panels, sometimes involving “multiple registrations from people who are outside the U.S.,” Douglas Rivers, the chief scientist at YouGov and a political science professor at Stanford, wrote in an email. Those could be efforts to bolster particular causes or candidates or, more often, schemes to make money by collecting small sums over and over again.

“We have a whole host of procedures to screen out these panelists,” Rivers wrote, adding that the firm was continuing to analyze what happened with the Holocaust question.

On polls of close elections, bogus respondents answering at random will usually “more or less cancel each other out,” said Andrew Mercer, senior research methodologist at Pew.

“But for something that’s very rare, like Holocaust denial,” random responses will produce error that is all on one side. “It’s going to end up inflating the incidence,” he said.

In previous research, for example, Pew found that 12% of respondents in opt-in survey panels who said they were under 30 also claimed that they were licensed to operate a nuclear submarine.
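The arithmetic behind Mercer's point can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a reconstruction of either poll: the function name `observed_share`, the 20% share of bogus respondents and the idea that they answer "yes" half the time are all hypothetical numbers chosen to show the mechanism.

```python
def observed_share(true_share, bogus_fraction, bogus_yes_rate=0.5):
    """Share of "yes" answers a poll would report when some respondents answer at random.

    true_share      -- share of genuine respondents who really would say "yes"
    bogus_fraction  -- hypothetical share of the sample answering at random
    bogus_yes_rate  -- assumed chance that a random answerer picks "yes"
    """
    genuine_yes = (1 - bogus_fraction) * true_share
    bogus_yes = bogus_fraction * bogus_yes_rate
    return genuine_yes + bogus_yes


# Compare a near-even question with a rare one, using the same hypothetical
# 20% share of random answerers in both cases.
for label, true_share in [("close election", 0.50), ("rare belief", 0.03)]:
    seen = observed_share(true_share, bogus_fraction=0.20)
    print(f"{label}: true {true_share:.0%}, observed {seen:.1%}")
```

Under those assumptions, the close election still comes out at 50%, because the random answers split evenly around a number that was already near the middle. The rare belief, by contrast, jumps from 3% to 12.4%: almost all of the reported "yes" answers come from people who were not really answering the question.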

The lesson here is an old one, popularized by the late astronomer Carl Sagan: “Extraordinary claims require extraordinary evidence.” If a poll result seems just too startling to be true, there’s a good chance it isn’t.

Leaping to conclusions

A second category of potential problems doesn’t involve the data so much as the way people, especially us journalists, interpret them — drawing definitive conclusions from less than definitive numbers.

Consider the question of how much progress Republicans are making among Black and Latino voters.

There’s no question, as I’ve previously written, that Republicans gained ground between 2016 and 2020, especially among Latino voters who already identified as conservatives. There was also smaller movement toward the GOP among Black voters.

Has that trend continued? Some recent surveys, including the widely cited New York Times/Siena College poll, indicate it may have accelerated. Biden has hemorrhaged support among younger Black and Latino voters, that poll has found.

In a recent article that drew a lot of attention, John Burn-Murdoch, the chief data journalist for the Financial Times, stitched together data from several different types of polls to declare that “American politics is in the midst of a racial realignment.”

The response from many political scientists and other analysts was, in effect, “Not so fast.”

Pre-election surveys can tell you what potential voters are thinking today, but comparing them with past election returns is dicey, they noted.

If the actual results in 2024 track what the New York Times/Siena polls are currently finding, “fine, let’s talk racial realignment,” said Vanderbilt University political science professor John Sides. Until then, however, “we have to wait and see.”

How we did

Our UC Berkeley Institute of Governmental Studies/Los Angeles Times polls had a notably good year predicting elections.

The final poll before this year’s primary showed, for example, that Proposition 1, the $6.4-billion mental health bond measure backed by Gov. Gavin Newsom, had support from 50% of likely voters.

As of Thursday morning, that was almost exactly where the “yes” vote stood — 50.2% — with almost 90% of the state’s votes counted.

The poll also correctly forecast that Democratic Rep. Adam B. Schiff of Burbank and Republican former Dodgers player Steve Garvey would be the top two finishers in the primary for Senate, with Democratic Rep. Katie Porter of Irvine in third place.

In the survey, taken about a week before the election, 9% of voters remained undecided. Among those who had made up their minds, Garvey had 30% of the vote, Schiff 27% and Porter 21%, the poll found.

The poll appears to have been very close on Garvey’s number: with about 800,000 votes still to count, he has 32%, within the poll’s estimated margin of error of 2 percentage points in either direction. The survey slightly understated backing for Schiff, who also has 32%, and overstated support for Porter, who currently sits at 15%. That could mean that final group of undecided voters broke for Schiff.

That level of accuracy is not uncommon. In the 2022 midterms, for example, polls by nonpartisan groups, universities and media organizations were extremely accurate.

There’s a takeaway in all this for people interested in politics, especially in a hotly contested election year: Don’t over-focus on any individual poll, especially if it has a startling finding that hasn’t cropped up anywhere else. Be skeptical about sweeping conclusions about events that are still unfolding. And even, or maybe especially, when a poll shows your favored candidate trailing, take it for what it is — neither an oracle, nor a nefarious plot, but a snapshot in time.