Hyderabad and New Delhi, India – Bismillah Bee can’t conceive of owning a car. The 67-year-old widow and 12 members of her family live in a cramped three-room house in an urban slum in Hyderabad, the capital of the Indian state of Telangana. Since her rickshaw puller husband’s death two years ago of mouth cancer, Bee makes a living by peeling garlic for a local business.

But an algorithmic system, which the Telangana government deploys to digitally profile its more than 30 million residents, tagged Bee’s husband as a car owner in 2021, when he was still alive. This deprived her of the subsidised food that the government must provide to the poor under the Indian food security law.

Thus, when the COVID-19 pandemic was raging in India and her husband’s cancer had peaked, Bee was running between government authorities to convince them that she did not own a car and that she indeed was poor.

The authorities did not trust her – they believed their algorithm. Her fight to get her rightful subsidised food supply reached the Supreme Court of India.

Bee’s family is listed as being “below-poverty-line” in India’s census records. The classification allowed her and her husband access to the state’s welfare benefits, including up to 12 kilogrammes of rice at one rupee ($0.012) per kg as against the market price of about 40 rupees ($0.48).

India runs one of the world’s largest food security programmes, which promises subsidised grains to about two-thirds of its 1.4 billion population.

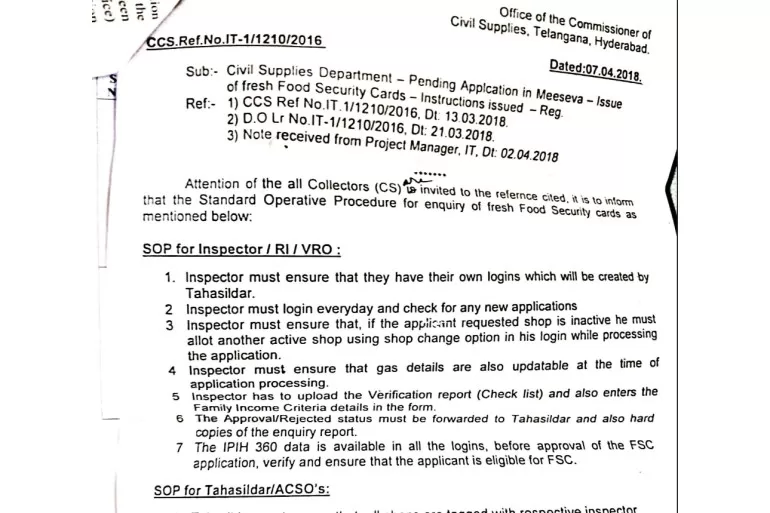

Historically, government officials verified and approved welfare applicants’ eligibility through field visits and physical verifications of documents. But in 2016, Telangana’s previous government, under the Bharat Rashtra Samithi party, started arming the officials with Samagra Vedika, an algorithmic system that consolidates citizens’ data from several government databases to create comprehensive digital profiles or “360-degree views”.

Physical verification was still required, but under the new system, the officials were mandated to check whether the algorithmic system approved the eligibility of the applicant before making their own decision. They could either go with the algorithm’s prediction or provide their reasons and evidence to go against it.

Initially deployed by the state police to identify criminals, the system is now widely used by the state government to ascertain the eligibility of welfare claimants and to catch welfare fraud.

In Bee’s case, however, Samagra Vedika mistook her late husband Syed Ali, the rickshaw puller, for Syed Hyder Ali, a car owner – and the authorities accepted the algorithm’s word.

Bee is not the only victim of such digital snafus. From 2014 to 2019, Telangana cancelled more than 1.86 million existing food security cards and rejected 142,086 fresh applications without any notice.

The government initially claimed that these were all fraudulent claimants of subsidy and that being able to “weed out” the ineligible beneficiaries had saved it large sums of money.

But our investigation reveals that several thousands of these exclusions were done wrongfully, owing to faulty data and bad algorithmic decisions by Samagra Vedika.

Once beneficiaries are excluded, the onus is on them to prove to government agencies that they are entitled to the subsidised food.

Even when they did so, officials often favoured the decision of the algorithm.

Rise of welfare algorithms

India spends roughly 13 percent of its gross domestic product (GDP), or close to $256bn, on providing welfare benefits including subsidised food, fertilisers, cooking gas, crop insurance, housing and pensions, among others.

But the welfare schemes have historically been plagued by complaints of “leakages”. In several instances, the government found that corrupt officials had diverted subsidies to ineligible claimants or that fraudulent claimants had misrepresented their identity or eligibility to claim benefits.

In the past decade, the federal and several state governments have increasingly relied on technology to plug these leaks.

First, to prevent identity fraud, they linked the schemes with Aadhaar, the controversial biometric-based unique identification number provided to every Indian.

But Aadhaar doesn’t verify the eligibility of the claimants. And so, over the past few years, several states have adopted new technologies that use algorithms – opaque to the public – to verify this eligibility.

Over the past year, Al Jazeera investigated the use and impact of such welfare algorithms in partnership with the Pulitzer Center’s Artificial Intelligence (AI) Accountability Network. The first part of our series reveals how the unfettered use of the opaque and unaccountable Samagra Vedika in Telangana has deprived thousands of poor people of their rightful subsidised food for years, a problem made worse by unhelpful government officials.

Samagra Vedika has been criticised in the past for its potential misuse in mass surveillance and risk to citizens’ privacy as it tracks the lives of the state’s 30 million residents. But its efficacy in catching welfare fraud has not been investigated until now.

In response to detailed questions sent by Al Jazeera, Jayesh Ranjan, principal secretary at the Department of Information Technology in Telangana, said that the earlier system of in-person verification was “based on the discretion of officials, opaque and misused and led to corruption. Samagra Vedika has brought in a transparent and accountable method of identifying beneficiaries which is appreciated by the Citizens.”

Opaque and unaccountable

Telangana projects Samagra Vedika as the pioneering technology in automating welfare decisions.

Posidex Technologies Private Limited, the company that developed Samagra Vedika, says on its website that “it can save a few hundred crores every year for the state government[s] by identifying the leakages”. Other states have hired it to develop similar platforms.

But neither the state government nor the company has made public Samagra Vedika’s source code – the written set of instructions that run a computer programme – or any other verifiable data to back their claims.

Globally, researchers argue that the source code of government algorithms must be independently audited so that the accuracy of their predictions can be verified.

The state IT department denied our requests under the Right to Information Act to share the source code and the formats of the data used by Samagra Vedika to make decisions, saying the company had “rights over” them. Posidex Technologies denied our requests for an interview.

Over a dozen interviews with state officials, activists and those excluded from welfare schemes, as well as perusal of a range of documents including bidding records, gave us a glimpse of how Samagra Vedika uses algorithms to triangulate a person’s identity in multiple government databases – as many as 30 – and combines all the information to build a person’s profile.

Samagra Vedika was initially built in 2016 for the Hyderabad Police Commissionerate to create profiles of people of interest. The same year, it was introduced in the food security scheme as a pilot, and by 2018, it was on-boarded for most of the state welfare schemes.

As per Telangana Food Security Rules, the food security cards are issued to the oldest woman member of families that have an annual income of less than 150,000 rupees ($1,823) in rural areas, and 200,000 rupees ($2,431) in urban areas.

The government also imposes additional eligibility criteria: the family should not possess a four-wheeler, and no family member should have a regular government or private job or own any business such as a shop, petrol pump or rice mill.

This is where Samagra Vedika comes into play. When a query is raised, it searches the different databases. If it finds a sufficiently close match that violates any of the criteria, it tags the claimant as ineligible.
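The eligibility rules described above amount to a simple checklist. The sketch below is a hypothetical illustration, not Samagra Vedika’s code, which has not been made public: the `HouseholdProfile` type, its field names and the `ineligibility_reasons` function are invented for this example, and only the income limits and exclusion criteria come from the Telangana rules cited in this article. It shows why one wrong database match is enough to disqualify an otherwise eligible family.

```python
from dataclasses import dataclass

# Annual income limits (rupees) from the Telangana Food Security Rules cited above.
INCOME_LIMIT = {"rural": 150_000, "urban": 200_000}


@dataclass
class HouseholdProfile:
    # Hypothetical fields standing in for a consolidated "360-degree" profile.
    area: str                       # "rural" or "urban"
    annual_income: int              # rupees per year
    owns_four_wheeler: bool = False
    has_regular_job: bool = False   # any member with a regular government or private job
    owns_business: bool = False     # e.g. a shop, petrol pump or rice mill


def ineligibility_reasons(p: HouseholdProfile) -> list[str]:
    """Return every criterion the profile violates; an empty list means eligible."""
    reasons = []
    # Eligibility requires income *less than* the limit, so reaching it disqualifies.
    if p.annual_income >= INCOME_LIMIT[p.area]:
        reasons.append("income above limit")
    if p.owns_four_wheeler:
        reasons.append("owns a four-wheeler")
    if p.has_regular_job:
        reasons.append("family member has a regular job")
    if p.owns_business:
        reasons.append("owns a business")
    return reasons


# A hypothetical poor urban household wrongly linked to someone else's car.
household = HouseholdProfile(area="urban", annual_income=60_000, owns_four_wheeler=True)
print(ineligibility_reasons(household))  # ['owns a four-wheeler']
```

Because any single violated criterion produces a rejection, the outcome rests entirely on whether the database match that set each field was correct.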

Errors and harm

Since there are huge variations in how names and addresses are recorded in different databases, Samagra Vedika uses “machine learning” and “entity resolution technology” to uniquely identify a person.
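Because the state has refused to share Samagra Vedika’s source code, its actual matching method is unknown. The sketch below swaps in a generic technique, fuzzy string comparison with Python’s standard `difflib` module, to illustrate how matching on names alone can link two different people. The 0.7 cut-off, the helper function names and the comparison name “Ramesh Kumar” are assumptions made for this example.

```python
from difflib import SequenceMatcher

# Hypothetical cut-off: name pairs scoring at or above it are treated as one person.
MATCH_THRESHOLD = 0.7


def normalise(name: str) -> str:
    """Lower-case and collapse whitespace so trivial formatting differences are ignored."""
    return " ".join(name.lower().split())


def name_similarity(a: str, b: str) -> float:
    """Similarity in [0, 1] between two normalised names, using difflib's ratio()."""
    return SequenceMatcher(None, normalise(a), normalise(b)).ratio()


def is_same_person(a: str, b: str, threshold: float = MATCH_THRESHOLD) -> bool:
    return name_similarity(a, b) >= threshold


# The two different men in Bee's case share most of their name.
print(round(name_similarity("Syed Ali", "Syed Hyder Ali"), 2))  # 0.73
print(is_same_person("Syed Ali", "Syed Hyder Ali"))             # True
print(is_same_person("Syed Ali", "Ramesh Kumar"))               # False
```

Real entity-resolution systems usually weigh several fields, such as address and date of birth, rather than a name alone; the point here is only that a similarity threshold decides which records get merged.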

The government has previously said that the technology is of “high precision”; that “it never misses the right match” and gets the “least number of false positives”.

It has also said that the error of tagging a vehicle or a house to the wrong person happens in less than five percent of all cases and that it has corrected most of those errors.

But a Supreme Court-imposed re-verification of the rejected food cards shows the margin of error is much higher.

In April 2022, in a case initially filed by social activist SQ Masood on behalf of the excluded families, the apex court ordered the government to conduct field verification of all 1.9 million cards deleted since 2016.

Out of the 491,899 applications received for verification, the state had processed 205,734 by July 2022, the latest data publicly available. Of those, 15,471 applications were approved, suggesting that at least 7.5 percent of the processed cards had been wrongfully rejected.
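The 7.5 percent figure follows directly from the re-verification numbers reported above, and it covers only the applications processed so far, which were well under half of those received:

```python
# Figures from the Supreme Court-ordered re-verification, as of July 2022.
received = 491_899   # applications received for verification
processed = 205_734  # applications processed by July 2022
approved = 15_471    # processed applications that were approved

print(f"Processed: {processed / received:.1%} of applications received")
print(f"Approved: {approved / processed:.1%} of applications processed")
```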

“We found many genuinely needy families whose [food security card] applications were rejected due to mis-tagging,” said Masood, who works with the Association for Socio-Economic Empowerment of the Marginalised (ASEEM), a non-profit. “They were wrongfully [tagged] for having four-wheelers, paying property tax or being government employees.”

The petition lists around 10 cases of wrongful exclusions. Al Jazeera visited three of those and can confirm they were wrongly tagged by Samagra Vedika.

David Nolan, a senior investigative researcher at the Algorithmic Accountability Lab at Amnesty International, says the rationale for deploying systems like Samagra Vedika in welfare delivery is predicated on there being a sufficient volume of fraudulent applicants, which, in practice, is likely to increase the number of incorrect matches.

A false positive occurs when a system incorrectly labels a legitimate application as fraudulent or duplicative, and a false negative occurs when the system labels a fraudulent or duplicative application as legitimate. “Engineers will have to strike a balance between these two errors as one cannot be reduced without risking an increase to the other,” said Nolan.

The consequences of false positives are high as this means an individual or family is wrongly denied essential support.

“While the government claims to minimise these in the use of Samagra Vedika, these systems also come at a financial cost and the state has to demonstrate value for money by finding enough fraudulent or duplicative applications. This incentivises governments using automated systems to reduce the number of false negatives, leading the false positive rate to likely increase – causing eligible families to be unjustly excluded from welfare provision,” added Nolan.
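Nolan’s point about the trade-off can be illustrated with a toy example. The eight applications, their match scores and their fraud labels below are invented for illustration and are not Samagra Vedika data; the `error_counts` function is likewise hypothetical. Every application scoring at or above a threshold is flagged as fraudulent, so lowering the threshold catches more fraud but wrongly flags more legitimate families.

```python
# Hypothetical applications: (match score from the system, actually fraudulent?)
APPLICATIONS = [
    (0.95, True), (0.90, True), (0.82, False), (0.78, True),
    (0.74, False), (0.65, False), (0.60, True), (0.40, False),
]


def error_counts(threshold: float, apps=APPLICATIONS) -> tuple[int, int]:
    """Flag applications scoring at or above `threshold` as fraudulent.

    Returns (false_positives, false_negatives): a false positive is a legitimate
    application that gets flagged; a false negative is a fraud that is missed.
    """
    false_positives = sum(1 for score, fraud in apps if score >= threshold and not fraud)
    false_negatives = sum(1 for score, fraud in apps if score < threshold and fraud)
    return false_positives, false_negatives


for t in (0.9, 0.75, 0.5):
    fp, fn = error_counts(t)
    print(f"threshold {t}: false positives={fp}, false negatives={fn}")
```

In this toy data, moving the threshold from 0.9 to 0.5 reduces missed fraud from two cases to none, while legitimate applications wrongly flagged rise from none to three.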

Ranjan dismissed the concerns as “misgivings” and said all states try to “minimise inclusion errors and exclusion errors in all welfare programmes … Telangana Government has implemented a project called Samagra Vedika using latest technologies like Big data etc for the same objectives”.

He added that the government had followed the same practice in acquiring Samagra Vedika as it does for other software programmes, via an open tender, and that the use of Samagra Vedika had been verified by different departments for different sample sizes in various locations, which found “very high levels of accuracy”. This, he said, was further reinforced by field verifications by different departments.

Algorithms over humans

After Bee’s food security card was cancelled in 2016, the authorities sat on her renewal application for four years before finally rejecting it in 2021 because they claimed she possessed a four-wheeler.

“If we had money to buy a car, why would we live like this?” Bee asks. “If the officials came to my house, perhaps they would also see that. But nobody visited us.”

With help from ASEEM, the non-profit, she dug out the registration number of the vehicle her husband supposedly owned and found its real owner.

When Bee presented the evidence, the officials agreed there was an algorithmic error but said that her application could not be considered because her total family income exceeded the eligibility limit even though that was not the case.

Bee was also eligible for the subsidised grains based on other criteria including being a widow, being aged 60 years or more, and being a single woman “with no family support” or “assured means of subsistence”.

The Supreme Court petition lists cases of at least six other women who were denied the benefits of the food scheme without stating any reason or for wrong reasons such as possession of four-wheelers, which they never owned.

This includes excluded beneficiary Maher Bee, who lives in a rented apartment in Hyderabad with her husband and five children. Polio left her husband paralysed in the left leg and unable to drive a car. The family applied for a food security card in 2018 but was rejected in 2021 for “possessing a four-wheeler” even though they do not own one.

“Instead of three rupees, we spend 10 for every kilogramme of rice,” said Maher Bee. “We buy the same rice given under the scheme, from dealers who siphon it off and sell it at higher prices.”

“There is no accountability whatsoever when it comes to these algorithmic exclusions,” said Masood from ASEEM. “Previously, aggrieved people could go to local officials and get answers and guidance, but now the officials just don’t know … The focus of the government is to remove as many people from the beneficiary lists as possible when the focus should be that no eligible beneficiary is left without food.”

In November, the High Court of Telangana in a case filed by Bismillah Bee said that “the petitioner is eligible” for the food security card whenever the state government issues new cards. Bee, who has been denied ration for more than seven years, is still waiting.

(Tomorrow brings Part 2 of the series – when an algorithm declares living people dead)

Tapasya is a member of The Reporters’ Collective; Kumar Sambhav was the Pulitzer Center’s 2022 AI accountability fellow and is India research lead with Princeton University’s Digital Witness Lab; and Divij Joshi is a doctoral researcher at the Faculty of Laws, University College London.